📖 Some common functions


References and additional materials

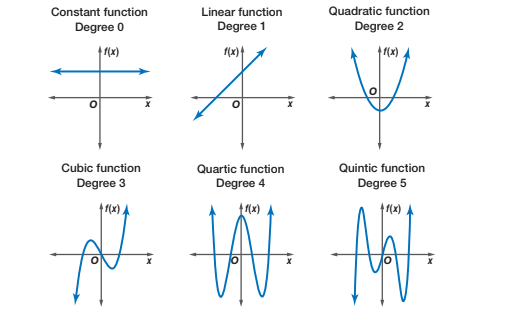

Common types of univariate functions include the following:

Polynomial functions

These include constant functions, linear (and affine) functions, quadratic functions, and some power functions as special cases.

Exponential functions

Power functions

Logarithmic functions

Trigonometric functions

We are unlikely to have enough time to cover trigonometric functions in this course.

There are also multivariate versions of these types of functions.

Polynomial functions

Fig. 16 Graphs of polynomial functions

Definition

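A polynomial function (of one variable) is a function of the form

\[
f(x) = a_n x^n + a_{n-1} x^{n-1} + \cdots + a_1 x + a_0 = \sum_{i=0}^{n} a_i x^i,
\]

where \(n\) is a non-negative integer, the coefficients \(a_0, a_1, \ldots, a_n \in \mathbb{R}\) are fixed parameters, and \(x \in \mathbb{R}\) is the single variable.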

In order to distinguish between different types of polynomials, we will typically assume that the coefficient on the term with the highest power of the variable \(x\) is non-zero. The only exception is the case in which this term involves \(x^0 = 1\), in which case we will allow both \(a_0 \ne 0\) and \(a_0 = 0\).

Example

A constant (degree zero) polynomial (\(a_0 \ne 0\) or \(a_0 = 0\)):

\[
f(x) = a_0
\]

Example

A linear (degree one) polynomial (\(a_1 \ne 0\)):

\[
f(x) = a_1 x + a_0
\]

It is sometimes useful to distinguish between linear functions and affine functions.

Example

A quadratic (degree two) polynomial (\(a_2 \ne 0\)):

\[
f(x) = a_2 x^2 + a_1 x + a_0
\]

Example

A cubic (degree three) polynomial (\(a_3 \ne 0\)):

\[
f(x) = a_3 x^3 + a_2 x^2 + a_1 x + a_0
\]
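The polynomial examples above can be evaluated numerically with a short sketch. The function name `poly_eval` and the convention of listing coefficients from \(a_0\) upwards are assumptions made for illustration; the evaluation uses Horner's scheme, which is one common way to compute a polynomial's value.

```python
def poly_eval(coeffs, x):
    """Evaluate a_0 + a_1*x + ... + a_n*x^n, with coeffs = [a_0, a_1, ..., a_n]."""
    result = 0.0
    # Horner's scheme: start from the highest-power coefficient and work down.
    for a in reversed(coeffs):
        result = result * x + a
    return result


constant_value = poly_eval([5.0], 3.0)                  # f(x) = 5
linear_value = poly_eval([1.0, 2.0], 3.0)               # f(x) = 2x + 1
quadratic_value = poly_eval([1.0, 0.0, 1.0], 2.0)       # f(x) = x^2 + 1
cubic_value = poly_eval([0.0, 0.0, 0.0, 1.0], 2.0)      # f(x) = x^3

print(constant_value, linear_value, quadratic_value, cubic_value)
```

The length of the coefficient list minus one gives the degree, provided the last coefficient is non-zero.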

Affine and linear functions

Note that the strict definition of a linear function \(f(x)\) requires \(f(\alpha x) = \alpha f(x)\) and \(f(x+y) = f(x) + f(y)\), where \(\alpha, x, y \in \mathbb{R}\).

However, we often loosely speak about a linear function of one variable being a function of the form \(f(x) = a_1 x + a_0\), where \(a_0 \in \mathbb{R}\) and \(a_1 \in \mathbb{R} \setminus \{0\}\) are fixed parameters, and \(x \in \mathbb{R}\) is the single variable.

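Clearly, when \(a_0 \ne 0\), a function of this form does not satisfy the strict definition of a linear function. For example, \(f(0) = a_0 \ne 0\), whereas \(f(\alpha x) = \alpha f(x)\) with \(\alpha = 0\) requires \(f(0) = 0\). Instead, such a function satisfies a different definition, that of an affine function. Strictly speaking, linear functions are thus those of the form \(f(x) = a_1 x\), in which \(a_0 = 0\).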

Using this more precise terminology, the family of linear functions is a proper subset of the family of affine functions. Note that we assume that \(a_1 \in \mathbb{R} \setminus \{0\}\) in both cases.
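The distinction between linear and affine functions can be checked numerically. The sketch below is only a spot check on a handful of sample points (so it can refute linearity but cannot prove it), and the helper name `is_linear_on_samples` and the particular coefficients are assumptions chosen for illustration.

```python
def is_linear_on_samples(f, samples, alphas, tol=1e-9):
    """Check additivity and homogeneity of f on the given sample points only."""
    for x in samples:
        for y in samples:
            # Additivity: f(x + y) = f(x) + f(y)
            if abs(f(x + y) - (f(x) + f(y))) > tol:
                return False
        for alpha in alphas:
            # Homogeneity: f(alpha * x) = alpha * f(x)
            if abs(f(alpha * x) - alpha * f(x)) > tol:
                return False
    return True


def strictly_linear(x):
    return 3.0 * x          # a_1 = 3, a_0 = 0


def affine_only(x):
    return 3.0 * x + 2.0    # a_1 = 3, a_0 = 2


samples = [-2.0, 0.5, 1.0, 4.0]
alphas = [-1.0, 0.0, 2.0]

linear_passes = is_linear_on_samples(strictly_linear, samples, alphas)
affine_passes = is_linear_on_samples(affine_only, samples, alphas)
print(linear_passes, affine_passes)
```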

Exponential functions

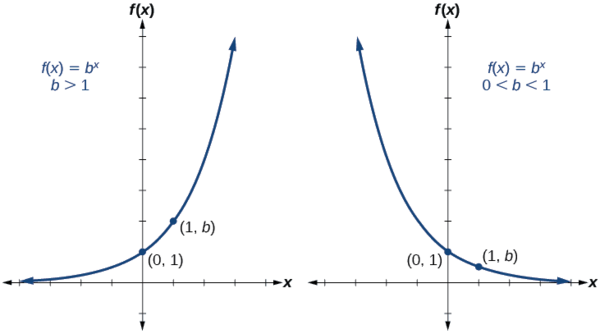

Fig. 17 Graphs of exponential functions

Definition

An exponential function is a non-linear function in which the independent variable appears as an exponent. It takes the form

\[
f(x) = C a^x,
\]

where \(C \in \mathbb{R}\) is a fixed parameter (called the coefficient), \(a \in \mathbb{R}\) is a fixed parameter (called the base), and \(x \in \mathbb{R}\) is an independent variable (called the exponent).

Note that if \(a = 0\), then \(f(x)\) is only defined for \(x > 0\).

Note also that if \(a < 0\), then sometimes \(f(x) \notin \mathbb{R}\). An example of this is the case when \(C = 1\), \(a = -1\) and \(x = \frac{1}{2}\). In this case, we have:

\[
f\left(\tfrac{1}{2}\right) = (-1)^{\frac{1}{2}} = \sqrt{-1} = i \notin \mathbb{R}.
\]
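A quick way to see the negative-base problem in practice is the following sketch. It relies on standard Python behaviour: the `**` operator returns a complex number for a negative base with a fractional exponent, while `math.pow`, which works only with real floats, raises `ValueError`. The variable names are illustrative.

```python
import math

# With C = 1, a = -1 and x = 1/2, the result is not a real number.
value = (-1) ** 0.5
print(value)  # a complex number very close to 1j (up to floating-point error)

# The real-valued power function refuses the same computation.
try:
    math.pow(-1, 0.5)
    real_result_exists = True
except ValueError:
    real_result_exists = False

print(real_result_exists)
```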

Popular choices of base

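Two popular choices of base for exponential functions are \(a = 10\) and \(a = e\), where \(e\) denotes Euler’s constant (also known as Euler’s number). Euler’s constant is defined to be the number

\[
e = \lim_{n \to \infty} \left(1 + \frac{1}{n}\right)^n \approx 2.71828.
\]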

Note that Euler’s constant is an irrational number. This means that it is a real number that cannot be represented as the ratio of an integer to a natural number.

The function \(f(x) = e^x\) is sometimes called “the” exponential function.
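One standard characterisation of \(e\) is the limit of \((1 + 1/n)^n\) as \(n\) grows. The sketch below, with the illustrative helper name `approx_e`, shows how slowly this sequence approaches the value stored in Python's `math.e`.

```python
import math


def approx_e(n):
    """Return (1 + 1/n)^n, which tends to e as n grows."""
    return (1 + 1 / n) ** n


for n in (1, 10, 1_000, 1_000_000):
    estimate = approx_e(n)
    print(n, estimate, abs(estimate - math.e))
```

The error shrinks roughly in proportion to \(1/n\), so this limit is convenient as a definition but not as a practical way to compute \(e\) to high precision.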

Exponential arithmetic

Assuming that the expressions are well-defined, we have the following laws of exponential arithmetic.

The power of zero: \(a^0 = 1\) if \(a \ne 0\), while \(a^0\) is undefined if \(a = 0\).

Multiplication of two exponential functions with the same base:

\[
a^x a^y = a^{x + y}
\]

Division of two exponential functions with the same base:

\[
\frac{a^x}{a^y} = a^{x - y}
\]

An exponential function whose base is an exponential function:

\[
(a^x)^y = a^{xy}
\]
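The laws of exponential arithmetic (same-base products add exponents, quotients subtract them, and a power of a power multiplies them) can be spot-checked numerically. The values of `a`, `x` and `y` below are arbitrary illustrative choices with a positive base, so that every expression is well-defined.

```python
import math

a, x, y = 2.0, 3.5, -1.25  # illustrative values; a > 0 keeps everything real

zero_power_law = a ** 0 == 1.0
product_law = math.isclose(a ** x * a ** y, a ** (x + y))
quotient_law = math.isclose(a ** x / a ** y, a ** (x - y))
power_of_power_law = math.isclose((a ** x) ** y, a ** (x * y))

print(zero_power_law, product_law, quotient_law, power_of_power_law)
```

Comparisons use `math.isclose` rather than `==` because floating-point powers are only approximate.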

Power functions

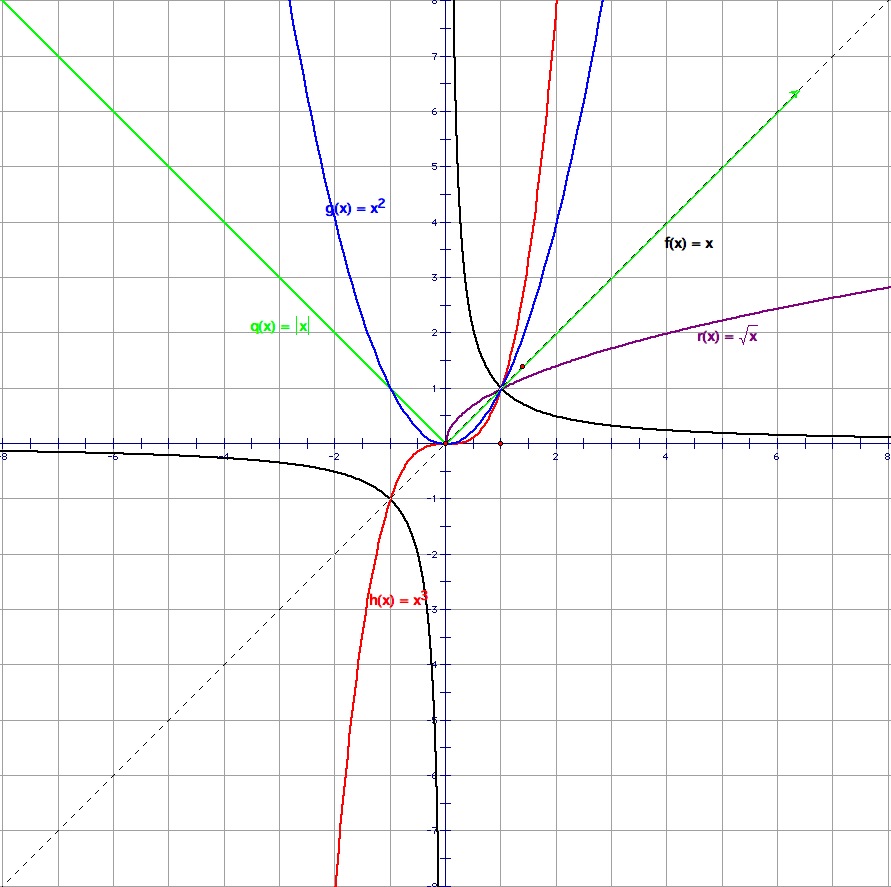

Fig. 18 Graphs of power functions

Definition

A power function takes the form

\[
f(x) = C x^a,
\]

where \(C \in \mathbb{R}\) is a fixed parameter, \(a \in \mathbb{R}\) is a fixed parameter, and \(x \in \mathbb{R}\) is an independent variable.

Note that when \(a \in \mathbb{N}\), a power function can also be viewed as a polynomial function with a single term.

Note that a power function can also be viewed as a type of exponential expression in which the base is \(x\) and the exponent is \(a\). This means that the laws of exponential arithmetic carry over to “power function arithmetic”.

Power function arithmetic

Assuming that the expressions are well-defined, we have the following laws of power function arithmetic.

The power of zero: \(x^0 = 1\) if \(x \ne 0\), while \(x^0\) is undefined if \(x = 0\).

Multiplication of two power functions with the same base:

\[
x^a x^b = x^{a + b}
\]

Division of two power functions with the same base:

\[
\frac{x^a}{x^b} = x^{a - b}
\]

A power function whose base is itself a power function:

\[
(x^a)^b = x^{ab}
\]

A rectangular hyperbola

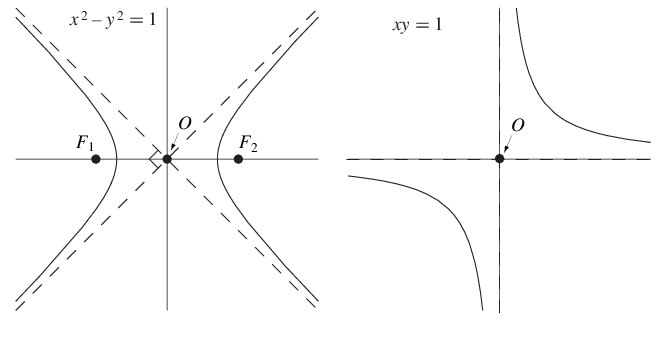

Fig. 19 Graphs of hyperbolas

Consider the function \(f : \mathbb{R} \setminus \{0\} \rightarrow \mathbb{R}\) defined by \(f(x) = \frac{a}{x}\), where \(a \ne 0\). This is a special type of power function, as can be seen by noting that it can be rewritten as \(f(x) = ax^{-1}\). The equation for the graph of this function is

\[
y = \frac{a}{x}.
\]

Note that this equation can be rewritten as \(xy = a\). This is the equation of a rectangular hyperbola.

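Economic application: A demand curve with a constant “own-price elasticity of demand”. For example, the demand curve \(Q = \frac{a}{P}\) with \(a > 0\) has an own-price elasticity of \(\frac{dQ}{dP} \cdot \frac{P}{Q} = -1\) at every price \(P > 0\).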
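To illustrate the constant-elasticity property, the sketch below treats the rectangular hyperbola as a demand curve \(Q = a/P\) and estimates the own-price elasticity \(\frac{dQ}{dP} \cdot \frac{P}{Q}\) with a central finite difference. The function names, the value \(a = 10\), and the step size are assumptions made for illustration.

```python
def demand(price, a=10.0):
    """Quantity demanded on the rectangular hyperbola Q = a / P."""
    return a / price


def elasticity(price, a=10.0, h=1e-6):
    """Approximate (dQ/dP) * (P/Q) using a central finite difference."""
    slope = (demand(price + h, a) - demand(price - h, a)) / (2 * h)
    return slope * price / demand(price, a)


elasticities = [elasticity(p) for p in (0.5, 2.0, 8.0)]
print(elasticities)  # each entry is approximately -1
```

The elasticity is the same at every price, even though the slope of the curve itself changes along it.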

Logarithms

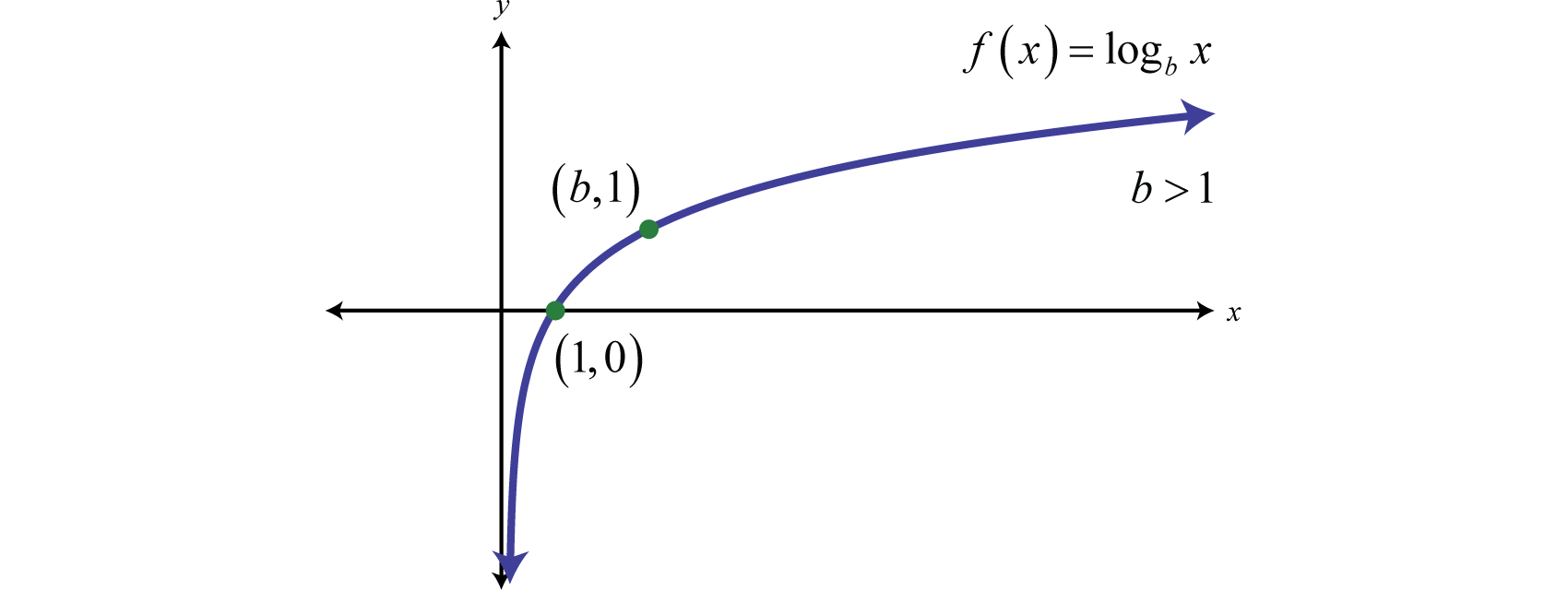

Fig. 20 Graphs of logarithmic functions

Definition

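A logarithm function is the inverse of an exponential function with the same base \(a\), where \(a > 0\) and \(a \ne 1\).

Thus we have

\[
a^x = y \iff \log_a(y) = x.
\]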

The expression \(\log_a\) stands for “log base \(a\)” or “logarithm base \(a\)”. Popular choices of base are \(a = 10\) and \(a = e\).

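The function

\[
f : \mathbb{R}_{++} \rightarrow \mathbb{R} \text{ defined by } f(x) = \log_a(x)
\]

is the “logarithm base \(a\)” function. The “logarithm base \(a\)” function is the inverse of the “exponential base \(a\)” function. The reason for this is that

\[
\log_a(a^x) = x \text{ for all } x \in \mathbb{R} \quad \text{and} \quad a^{\log_a(x)} = x \text{ for all } x \in \mathbb{R}_{++}.
\]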

Natural (or Naperian) logarithms

A “logarithm base e” is known as a natural, or Naperian, logarithm. It is named after John Napier. See Shannon (1995, pp. 270-271) for a brief introduction to John Napier. The standard notation for a natural logarithm is \(\ln\), although you could also use \(\log_e\).

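The function

\[
f : \mathbb{R}_{++} \rightarrow \mathbb{R} \text{ defined by } f(x) = \ln(x)
\]

is the “logarithm base \(e\)” function. The natural logarithm function is the inverse function for “the” exponential function, since

\[
\ln(e^x) = x \text{ for all } x \in \mathbb{R} \quad \text{and} \quad e^{\ln(x)} = x \text{ for all } x \in \mathbb{R}_{++}.
\]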
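The inverse relationship between logarithms and exponentials can be spot-checked by composing the two in both orders. The sketch below uses Python's `math.log` (which accepts an optional base) and `math.exp`; the base \(a = 2\) and the sample points are arbitrary illustrative choices.

```python
import math

a = 2.0                               # illustrative base, a > 0 and a != 1
real_points = (-3.0, 0.0, 0.5, 4.0)   # any real x is allowed as an exponent
positive_points = (0.1, 1.0, 7.0)     # logarithms need a positive argument

# log_a(a^x) = x and a^(log_a(y)) = y
log_undoes_exp = all(
    math.isclose(math.log(a ** x, a), x, abs_tol=1e-12) for x in real_points
)
exp_undoes_log = all(
    math.isclose(a ** math.log(y, a), y) for y in positive_points
)

# The same round trips for the natural logarithm and "the" exponential function.
ln_undoes_exp = all(
    math.isclose(math.log(math.exp(x)), x, abs_tol=1e-12) for x in real_points
)
exp_undoes_ln = all(
    math.isclose(math.exp(math.log(y)), y) for y in positive_points
)

print(log_undoes_exp, exp_undoes_log, ln_undoes_exp, exp_undoes_ln)
```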

Logarithmic arithmetic

Assuming that the expressions are well-defined, we have the following laws of logarithmic arithmetic.

The logarithm of a product (same base):

\[
\log_a(xy) = \log_a(x) + \log_a(y)
\]

The logarithm of a quotient (same base):

\[
\log_a\left(\frac{x}{y}\right) = \log_a(x) - \log_a(y)
\]

A logarithmic function whose argument is an exponential function:

\[
\log_a(x^y) = y \log_a(x)
\]
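The standard logarithm laws (the log of a product is a sum of logs, the log of a quotient is a difference of logs, and the log of a power pulls the exponent out front) can be verified numerically. The helper name `log_a` and the sample values are illustrative assumptions.

```python
import math

a, x, y = 10.0, 8.0, 2.5  # illustrative values: a > 0, a != 1, x > 0, y > 0


def log_a(value, base=a):
    """Logarithm of value in the given base."""
    return math.log(value, base)


product_law = math.isclose(log_a(x * y), log_a(x) + log_a(y))
quotient_law = math.isclose(log_a(x / y), log_a(x) - log_a(y))
power_law = math.isclose(log_a(x ** y), y * log_a(x))

print(product_law, quotient_law, power_law)
```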

Note that

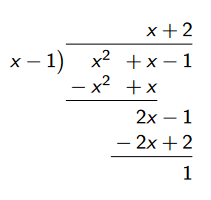

Rational functions

Definition

A rational function \(R(x)\) is simply the ratio of two polynomial functions, \(P(x)\) and \(Q(x)\). It takes the form

\[
R(x) = \frac{P(x)}{Q(x)},
\]

where

\[
P(x) = \sum_{i=0}^{m} a_i x^i
\]

is an \(m\)-th order polynomial (so that \(a_m \ne 0\)), and

\[
Q(x) = \sum_{j=0}^{n} b_j x^j
\]

is an \(n\)-th order polynomial (so that \(b_n \ne 0\)).

Note that there is no requirement that the polynomial functions \(P(x)\) and \(Q(x)\) be of the same order. (In other words, we do not require that \(m = n\).)

The most interesting case is when \(m < n\). In such cases, the rational function \(R(x)\) is called a “proper” rational function.

When \(m \ge n\), we can always use the process of long division to write the original rational function \(R(x)\) as the sum of a polynomial function \(Y(x)\) and a proper rational function \(R^*(x)\), so that \(R(x) = Y(x) + R^*(x)\).
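The long-division step can be sketched in a few lines. The function name `poly_divmod` and the convention of listing coefficients from the highest power down are assumptions for illustration. The example divides \(x^2 + 1\) by \(x - 1\), giving \(\frac{x^2 + 1}{x - 1} = (x + 1) + \frac{2}{x - 1}\).

```python
def poly_divmod(p, q):
    """Divide polynomial p by q using long division.

    Coefficients are listed from the highest power down to the constant term.
    Returns (quotient, remainder) as coefficient lists in the same convention.
    """
    if not q or q[0] == 0:
        raise ValueError("leading coefficient of the divisor must be non-zero")
    remainder = [float(c) for c in p]
    quotient = []
    while len(remainder) >= len(q):
        # Cancel the current leading term of the remainder.
        factor = remainder[0] / q[0]
        quotient.append(factor)
        for i in range(len(q)):
            remainder[i] -= factor * q[i]
        remainder.pop(0)  # the leading term is now zero
    return quotient, remainder


# P(x) = x^2 + 1 and Q(x) = x - 1
quotient, remainder = poly_divmod([1, 0, 1], [1, -1])
print(quotient, remainder)  # Y(x) = x + 1 and remainder 2

# Spot check at x = 3: R(3) should equal Y(3) + remainder / Q(3).
x = 3.0
original_value = (x ** 2 + 1) / (x - 1)
decomposed_value = (x + 1) + remainder[0] / (x - 1)
```

Here the quotient is the polynomial part \(Y(x)\), and the remainder divided by \(Q(x)\) is the proper rational part \(R^*(x)\).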

Some economic examples

Utility functions

Production functions

Marshallian demand functions

Marshallian demand correspondences

Hicksian demand functions

Hicksian demand correspondences

Supply functions

Best response functions

Best response correspondences

Utility functions

A utility function \(U : X \rightarrow \mathbb{R}\) maps a consumption set \(X\) into the real line. The consumption set \(X\) will typically be a set of possible commodity bundles or a set of possible bundles of characteristics.

Often, it will be the case that \(X = \mathbb{R}^L_+\). Some examples (with \(L = 2\)) include the following:

Cobb-Douglas: \(U(x_1, x_2) = x_1^{\alpha} x_2^{1 - \alpha}\), where \(\alpha \in (0, 1)\).

Perfect Substitutes: \(U(x_1, x_2) = x_1 + x_2\).

Perfect Complements (or Leontief): \(U(x_1, x_2) = \min\{x_1, x_2\}\).
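The three utility functions listed above translate directly into code. The function names, the default \(\alpha = 0.5\), and the sample bundle \((4, 1)\) are illustrative assumptions.

```python
def cobb_douglas(x1, x2, alpha=0.5):
    """U(x1, x2) = x1^alpha * x2^(1 - alpha), with alpha in (0, 1)."""
    return x1 ** alpha * x2 ** (1 - alpha)


def perfect_substitutes(x1, x2):
    """U(x1, x2) = x1 + x2."""
    return x1 + x2


def perfect_complements(x1, x2):
    """U(x1, x2) = min(x1, x2), also known as Leontief utility."""
    return min(x1, x2)


bundle = (4.0, 1.0)
print(cobb_douglas(*bundle))         # 4^0.5 * 1^0.5
print(perfect_substitutes(*bundle))  # 4 + 1
print(perfect_complements(*bundle))  # min(4, 1)
```

The same bundle is ranked quite differently by the three functions: only perfect complements ignores the extra units of the first good.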

Marshallian demand functions

An individual’s Marshallian demand function or correspondence \(d: \mathbb{R}^L_{++} \times \mathbb{R}_{++} \rightarrow \mathbb{R}^L_+\) maps the space of price and income combinations into the space of commodity bundles.

Example: Marshallian demands for Cobb-Douglas preferences in a two-commodity world.

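Here we have a Marshallian demand function. Writing \(p_1\) and \(p_2\) for the two prices and \(m\) for income, the Cobb-Douglas utility function \(U(x_1, x_2) = x_1^{\alpha} x_2^{1 - \alpha}\) yields:

\[
d(p_1, p_2, m) = \left( \frac{\alpha m}{p_1}, \frac{(1 - \alpha) m}{p_2} \right).
\]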
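As a numerical sanity check, the standard Cobb-Douglas demands \(x_1 = \alpha m / p_1\) and \(x_2 = (1 - \alpha) m / p_2\) can be compared against a brute-force search along the budget line. The symbol \(m\) for income, the function names, and the parameter values are assumptions chosen for illustration.

```python
def cobb_douglas(x1, x2, alpha):
    """U(x1, x2) = x1^alpha * x2^(1 - alpha)."""
    return x1 ** alpha * x2 ** (1 - alpha)


def cobb_douglas_demand(p1, p2, m, alpha):
    """Closed-form demands: spend share alpha on good 1, the rest on good 2."""
    return alpha * m / p1, (1 - alpha) * m / p2


p1, p2, m, alpha = 2.0, 5.0, 100.0, 0.3   # illustrative prices, income, share
x1_star, x2_star = cobb_douglas_demand(p1, p2, m, alpha)
spending = p1 * x1_star + p2 * x2_star
best_utility = cobb_douglas(x1_star, x2_star, alpha)

# Brute force: walk along the budget line and record the highest utility found.
grid_best = 0.0
for k in range(1, 1000):
    x1 = (k / 1000) * m / p1
    x2 = (m - p1 * x1) / p2
    grid_best = max(grid_best, cobb_douglas(x1, x2, alpha))

print(x1_star, x2_star, spending, best_utility, grid_best)
```

The demanded bundle exhausts income, and no point on the grid gives higher utility than the closed-form solution.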

Here we have a Marshallian demand correspondence:
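One standard illustration of a demand correspondence, offered here as an assumption rather than as the example originally shown, comes from the perfect-substitutes utility function \(U(x_1, x_2) = x_1 + x_2\). Writing \(p_1, p_2\) for prices and \(m\) for income (notation assumed for this sketch), the consumer buys only the cheaper good, and any bundle on the budget line is optimal when the prices are equal:

\[
d(p_1, p_2, m) =
\begin{cases}
\left\{ \left( \frac{m}{p_1}, 0 \right) \right\} & \text{if } p_1 < p_2, \\
\left\{ (x_1, x_2) \in \mathbb{R}^2_+ : p_1 x_1 + p_2 x_2 = m \right\} & \text{if } p_1 = p_2, \\
\left\{ \left( 0, \frac{m}{p_2} \right) \right\} & \text{if } p_1 > p_2.
\end{cases}
\]

Because the set of optimal bundles contains more than one element when \(p_1 = p_2\), this mapping is a correspondence rather than a function.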